KEATS (Moodle) allows assignment submissions in many ways – this is a record of how a simple question became an extended investigation.

Academic Staff Requirements

“Can I check what my students have previously uploaded?”

An academic colleague had used Blackboard (another Virtual Learning Environment) before coming to the Faculty of Natural, Mathematical & Engineering Sciences (NMES) at King’s. He asked if KEATS, our Moodle instance, could behave like Blackboard and allow students to submit multiple attempts to a programming assignment any time they want.

After a follow-up call with the academic colleague, it became clear that the aim was to access anything students had uploaded before their final submission, because the final submission might contain a wrong or broken file. The marker could then grade with reference to an earlier submission (program code or essay draft).

He had the following requirements:

- Notification emails to both staff and students when a file is uploaded successfully

- Students to be able to submit as often as they want

- Marker to be able to review all uploaded attempts in order to:

  - award marks if an earlier submitted program worked but a later submission introduced bugs that broke it, and

  - monitor the development of the code, make comments, compare changes, and prevent collusion
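The marking requirement above can be illustrated with a minimal Python sketch. It assumes a marker has a list of a student's attempts, each with a timestamp and a judgement on whether the code ran correctly. The `Attempt` class, its fields, and `latest_working_attempt` are hypothetical names for illustration only and are not part of Moodle or Blackboard.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List, Optional


@dataclass
class Attempt:
    # Hypothetical record of one student attempt (not a Moodle data structure).
    number: int
    submitted_at: datetime
    runs_correctly: bool  # the marker's judgement after testing the code


def latest_working_attempt(attempts: List[Attempt]) -> Optional[Attempt]:
    """Return the most recent attempt that ran correctly, or None if none did."""
    working = [a for a in attempts if a.runs_correctly]
    return max(working, key=lambda a: a.submitted_at, default=None)


attempts = [
    Attempt(1, datetime(2023, 3, 1, 10, 0), True),
    Attempt(2, datetime(2023, 3, 2, 15, 30), True),
    Attempt(3, datetime(2023, 3, 3, 9, 45), False),  # final upload is broken
]
best = latest_working_attempt(attempts)
print(best.number if best else "no working attempt")
```

In this made-up example the final upload is broken, so the marker would fall back to the second attempt. This is only possible when the platform keeps every attempt.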

This investigation looks at practical solutions to administering programming assignments as well as non-programming ones such as essays.

Background: Assessments on Blackboard (VLE)

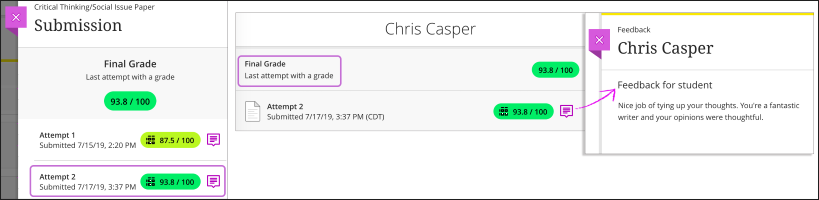

In a Blackboard assignment, students are required to click a Submit button for the markers to access their work. If multiple attempts are allowed, students can submit further attempts at any time. These are stored as Attempt 1, Attempt 2 and so on, and are available for the markers to view. This way, staff can review previous submissions, but they cannot access drafts.

KEATS: Moodle Assignment

The first step was to investigate the options and settings in Moodle Assignment, which is the tool that was already used by most colleagues for similar assignments.

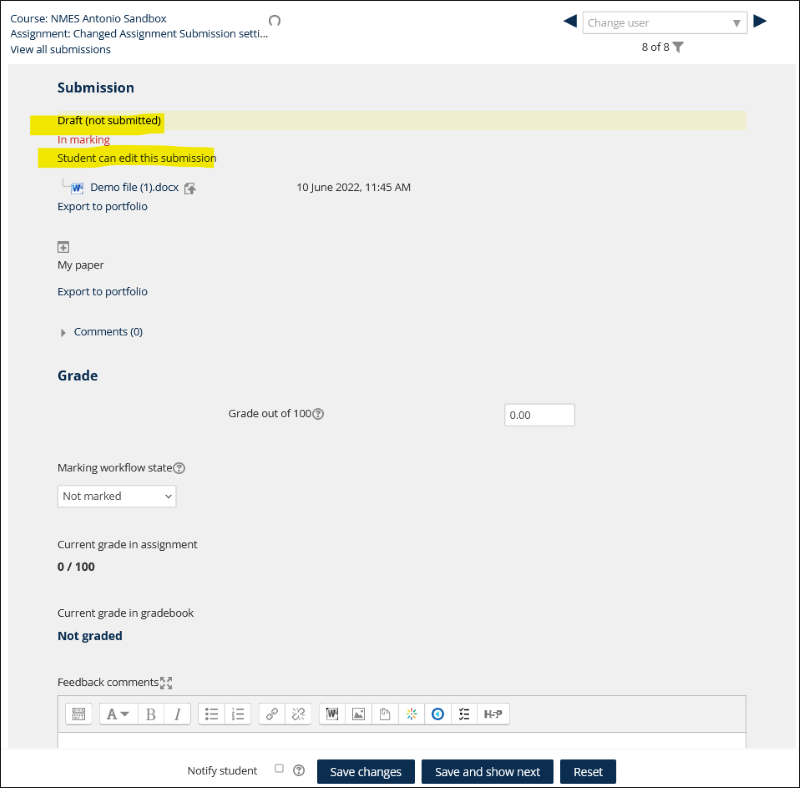

With our current default settings, students can change their uploaded files as often as they want, and the submission is finalised only at the assignment deadline. Although instructors can see the latest uploaded files (drafts) even before the deadline, files removed or replaced by students are no longer accessible to staff. This means that only one version is accessible to markers.

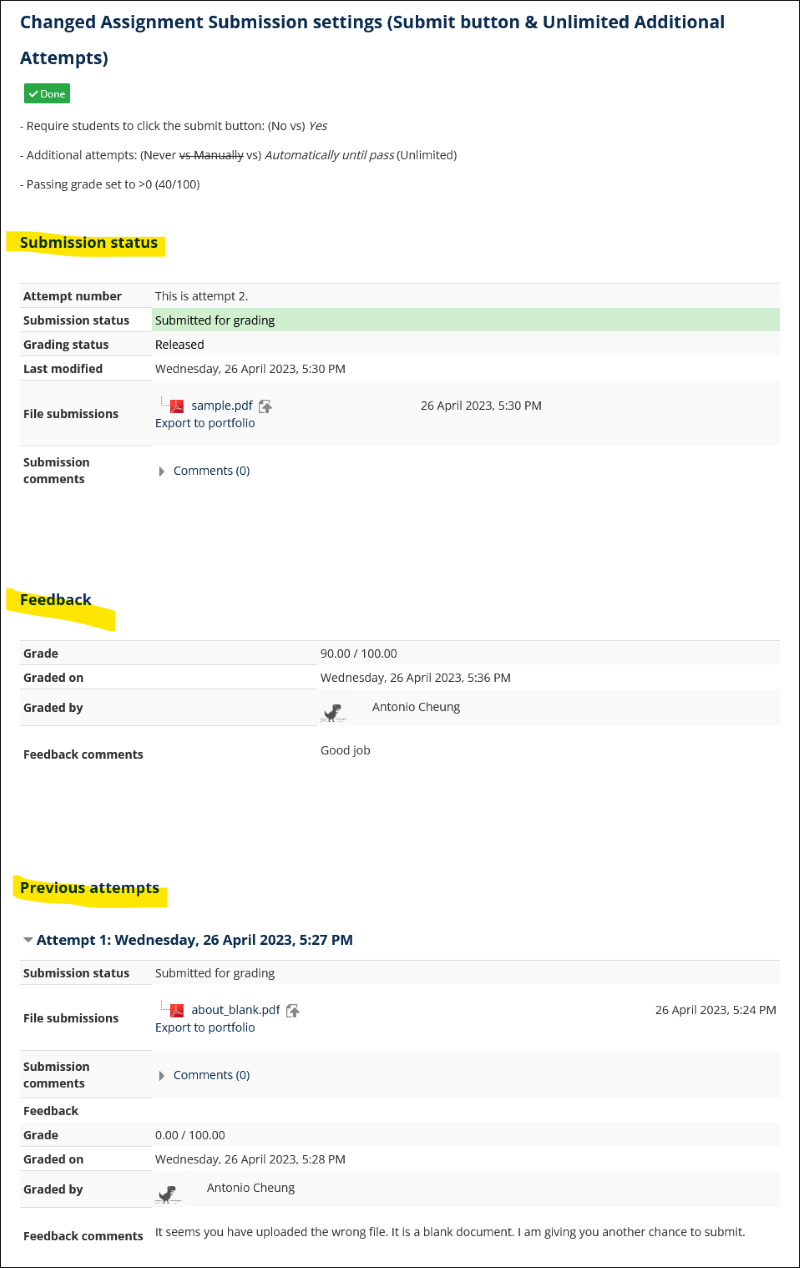

Multiple submissions can be enabled with the use of the “Require student to click the Submit button” setting so that staff can review previous attempts, as on Blackboard. Feedback can be left on each attempt. However, students cannot freely submit new attempts, because staff have to manually grant additional attempts to each student. Submissions are time-stamped and can be reviewed by students and markers, but students only receive notification emails after grading, whereas markers can receive notifications for submissions. This did not yet resolve our problem.

KEATS: Moodle Quiz

We then considered Moodle Quiz, which some departments at King’s already use to collect scanned exam scripts: a Quiz containing an Essay-type question that allows file upload.

While exams usually allow only a single attempt, Moodle Quiz can be set to allow multiple attempts (Grade > Attempts allowed). The “Enforced delay between attempts” setting (from seconds to weeks) under “Extra restrictions on attempts” may be used to discourage a flood of attempts. Students can submit new attempts as often as needed because no staff intervention is required. The drawback is that there is no submission notification email, but the quiz summary screen should indicate to the student that the file has been submitted. The Quiz attempts page for markers allows for easy review of previous attempts and feedback on each attempt. It is also possible to download all submissions, as in Moodle Assignment. This was recommended to the academic colleague as an interim solution while we continued the investigation.

Possible Policy Concerns

Regarding unlimited re-submissions, Quality Assurance colleagues reminded us that students may challenge (i) a perceived inequality in opportunities to get feedback, or (ii) subconscious bias based on previous submissions. Good communication with students and a structured schedule or clear arrangements should help manage expectations on both sides.

Turnitin Assignment and Other Assessment Options

Although the Moodle Quiz appeared to be a solution, we also considered other tools, some of which are readily integrated with KEATS at King’s:

Turnitin assignment allows multiple submissions as an option, but re-submissions will overwrite previously uploaded files. Alternatively, if it is set to a multi-part assignment, each part will be considered mandatory. However, the workflow for Turnitin assignment is not optimal for programming assignments.

Turnitin’s Gradescope offers Multi-Version Assignments for certain assignment types. It is available on KEATS for NMES. However, its programming assignment type does not support assignment versioning yet.

Edit history is available for Moodle Wiki and OU Wiki, whereas Moodle Forum, Open Forum, Padlet and OU Blog allow continuous participation and interaction between students. These tools could be useful for group programming or other social, collaborative learning projects. They are not a direct replacement for an individual programming assignment but an alternative mode of assessment.

Portfolios: Mahara has Timeline (version tracking) as an experimental feature. This may be suitable for essays but not for programming assignments.

Tracking Changes

Tracking changes is an important way to show development in programming assignments or essays, and cloud platforms (OneDrive, Google Drive, GitHub) can host files and track changes. When these are used for assignments, students can submit a Share link that allows instructors to access and assess their work and see how it evolved over time. The disadvantage of this option is that the grading experience is less integrated with Moodle. Some cloud platforms offer a File request feature where students can submit their files to a single location.
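To make "tracking changes" concrete, here is a minimal sketch of comparing two versions of a student's file using Python's standard `difflib` module. The file names, the sample code and the `diff_attempts` helper are invented for illustration. The platforms mentioned above provide their own version history views, and this is not how any of them works internally.

```python
import difflib


def diff_attempts(earlier: str, later: str) -> str:
    """Return a unified diff showing what changed between two versions."""
    lines = difflib.unified_diff(
        earlier.splitlines(),
        later.splitlines(),
        fromfile="attempt_1.py",  # hypothetical file names
        tofile="attempt_2.py",
        lineterm="",
    )
    return "\n".join(lines)


# Two made-up versions of a student's file: the later one introduces a bug.
attempt_1 = "def area(w, h):\n    return w * h\n"
attempt_2 = "def area(w, h):\n    return w + h\n"

report = diff_attempts(attempt_1, attempt_2)
print(report)
```

Lines starting with `-` were removed and lines starting with `+` were added, so a marker can see at a glance which change broke the program.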

Programming Assignments

Industries such as software development use Git as a standard and all changes are tracked. GitHub offers GitHub Classroom, and it can be used with different VLEs including Moodle, but it is not readily integrated with KEATS and requires setup. There may be privacy concerns as students need to link their own accounts.

The Outcomes / Lessons learnt

- This showcases how a simple question from an academic colleague can lead to an exploration of existing options as well as new tools and solutions.

- Different options are available on KEATS with their pros and cons.

- Existing tools, possible solutions, policies, and other considerations come into play.

Conclusion / Recommendations

KEATS Quiz matches the case requirements and was recommended to the academic colleague. It went smoothly: our colleague reported that there were no complaints from students and that they were happy with the recommended solution. It is relatively easy to set up and straightforward for students to submit. Clear step-by-step instructions for staff and students should be enough, but trialling this with a formative assignment would also help.

Depending on the nature of the task or subject, other tools may work better. TEL colleagues are always there to help!

Useful Links

- Using Assignment (Moodle) https://docs.moodle.org/311/en/Using_Assignment

- Question types: Essay (Moodle) https://docs.moodle.org/311/en/Question_types#Essay

- Gradescope: Creating and Grading Multi-Version Assignments (Beta) https://help.gradescope.com/article/khu0k5swco-instructor-assignment-versioning

- GitHub Classroom https://classroom.github.com/

Written by Antonio Cheung

Antonio is a Senior TEL Officer at the Faculty of Natural, Mathematical and Engineering Sciences (NMES).

September 2023