by Clare Birchall, Reader in Contemporary Culture, Department of English

It’s not often, as a theorist, that I have the opportunity to watch my ideas come to life. When the King’s Digital Lab offered to fund a workshop to explore how its designers and engineers might help me build a digital tool that enacts what I call ‘radical transparency’, I naturally jumped at the chance. Some public and private bodies implement weak forms of transparency: they offer the public access to certain data in lieu of political commitment, and hand over responsibility without power. By contrast, I envisage radical transparency as a set of processes that offer meaningful, political and contextualised information and data, and that promote experiences of political agency for digital users.

As well as the team at King’s Digital Lab, I wanted to draw together artists, computer scientists and other digital theorists to think through the ethical protocols as well as practical possibilities of any form radical transparency might take.

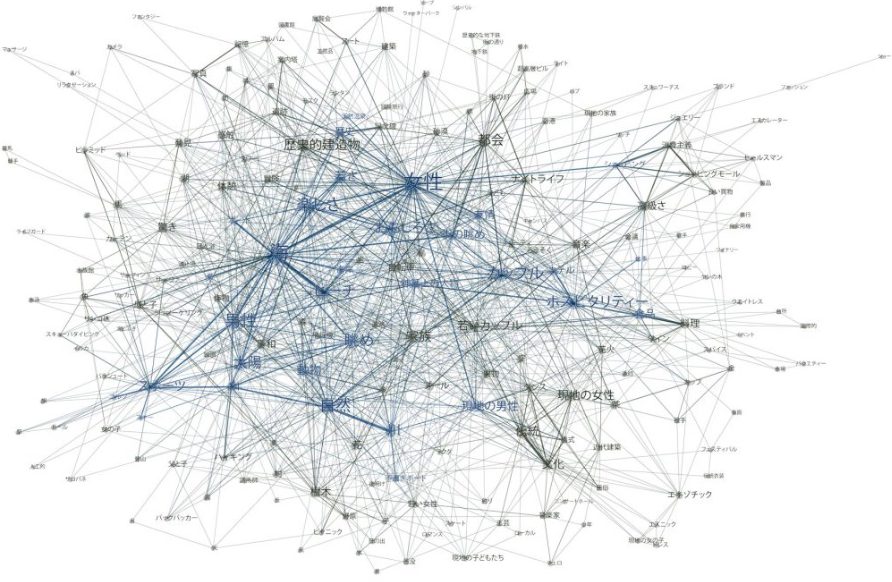

At the workshop on 30 October 2018, we’ll hear from the artist Burak Arikan, who has developed projects like Graph Commons. Taking inspiration from artists and collectives like Mark Lombardi and Bureau d’Études, Graph Commons enables people to create intricate maps of power relationships. Seda Gürses, a computer scientist and privacy theorist who splits her time between Leuven University and Princeton, will also join us to talk about her work on digital counter-optimisation, a logic that resists the demands placed upon systems and users for ever greater efficiency over other markers of success.

Does open data from corporations outsource responsibility to the public without offering us the right tools to intervene…?

Why do we need a ‘radical transparency digital tool’? In my book Shareveillance, I describe how citizens are asked to give up, share, view and act upon different forms of data. Data surveillance is one part of this story because it reduces the political potential of citizens to a flat data set; we are only important for the contribution our data makes to an algorithm looking for anomalies. At the same time, the kind of open data transparency on offer from many corporations and states reinforces the neoliberal tendency to outsource responsibility to the public without offering the right tools to intervene. We can, for example, look up school ratings on council websites, but have few opportunities to question the politics behind the data gathered on schools and the criteria for success. Transparency measures like this work on the assumption that technological fixes can be offered in lieu of ethical commitments or social justice.

Do online transparency measures elide issues of ethical conduct?

In this way, online transparency measures themselves become forms of Corporate Social Responsibility, eliding wider issues of ethical conduct. Facebook, for example, publishes a Transparency Report that details the number of government requests for users’ data and queries about intellectual property infringements on its site. Elsewhere, Facebook provides information about its data use policy. Read together, such provisions count as an example of corporate transparency that creates the veneer of openness and accountability, but does little to help users understand the monetisation and networked journeys of their data, or the algorithms that work to customise their online experience on the platform.

The challenge that participants in this workshop will grapple with is to experiment with ways of interrupting the imperatives and protocols at the heart of shareveillance through radical transparency. ‘Radical’ here means not producing more of the same kind of data under the same old regime of shareveillance, but attempting to change the kind of information that is made visible and, more ambitiously, the conditions of visibility in general. It would be a transparency that does not fall into the trap of reading social problems as information problems, or simply make inherently inequitable systems more efficient.

‘Radical transparency’ might then be envisaged as a mechanism able to challenge the circumscribed role that data transparency has been given. Radical transparency would allow data subjects to position themselves in relation to data, rather than be positioned by it. The task of this workshop is to imagine how such ideals can be put into practice.

While this workshop is invitation only, Clare Birchall will be discussing its outcomes at the King’s English Department Research Hour on 7 November 2018 (VWB 6.01), to which all are welcome.

Featured image from Wikimedia Commons.


Blog posts on King’s English represent the views of the individual authors and neither those of the English Department, nor of King’s College London.