Leading with UX: How we reimagined KEATS for 2025

KEATS, King’s College London’s virtual learning environment, plays a central role in teaching and learning across the institution. While it undergoes regular technical updates – such as Moodle version upgrades, security patches, and minor interface adjustments – recent years have seen a growing emphasis on improving its visual design and alignment with the King’s brand.

The upgrade to Moodle 4 in 2023 provided an opportunity to refresh the platform’s interface, resulting in a cleaner, more modern look and a stronger visual identity. However, due to the scale of the redesign and tight delivery timelines, there was limited scope for user involvement or data validation during that phase.

By 2025, with a more established visual identity in place, the focus shifted toward enhancing the user experience (UX) for students and staff. This phase prioritised functionality, usability, and user-centred improvements – moving beyond aesthetics to ensure the platform better supports everyday use.

A UX-led approach (on a tight deadline)

At the request of Digital Education, the UX team at King’s Digital led a six-week initiative to plan, test, and implement meaningful changes to KEATS. The approach was lean, data-informed, and highly collaborative.

The team conducted a rapid discovery phase, drawing on:

- Findings from previous usability testing

- Results from the System Usability Scale (SUS) survey

- Informal interviews with students and staff

These insights highlighted key areas where small, targeted improvements could significantly enhance the user experience.

Cross-functional UX workshops brought together designers, researchers, developers, and stakeholders to:

- Prioritise changes based on impact and feasibility

- Clarify the rationale behind each proposed improvement

- Define a realistic scope for a one-month delivery window

What we changed (and why it mattered)

Here’s what we delivered and how user feedback shaped every decision.

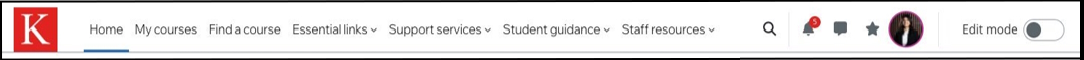

1. Top menu: clarity through card sorting

Challenge: Students reported difficulty locating key information, and the top menu occupied excessive screen space when scrolling.

Solution: A card-sorting exercise informed a redesigned top menu with clearer labels, reduced height, and improved responsiveness. The result is a more intuitive navigation experience that enhances content discoverability.

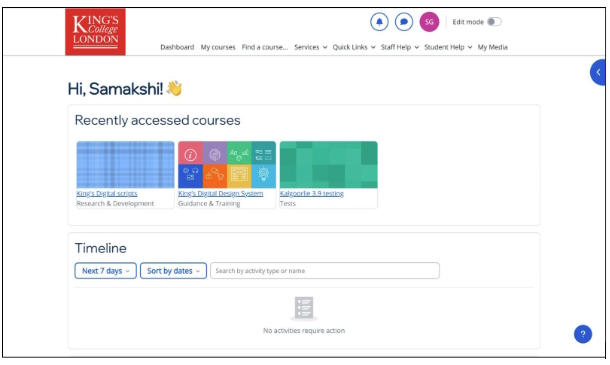

2. Dashboard: prioritising key tasks

Challenge: Usage data revealed that the dashboard experience lacked clarity and focus, with several competing elements diluting its effectiveness. In particular, the Timeline feature – especially important on mobile – was under-emphasised and not as accessible as it could be.

Solution: Through three design iterations, the dashboard was simplified, the Timeline was elevated in prominence, and visual hierarchy was improved. These changes support a more task-focused experience for students.

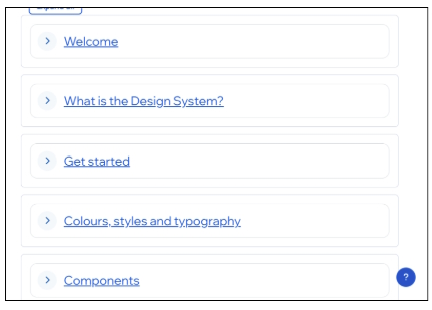

3. Custom Sections course format: consistency for focus

Challenge: Inconsistent course formats and reliance on unsupported plug-ins created usability and maintenance problems.

Solution: The Moodle-native Custom Sections format was restyled to align with institutional design guidelines. This enables consistent use across KEATS, supporting a more cohesive and accessible learning experience.

The impact

Despite the ambitious timeline, the initiative delivered measurable improvements:

- Positive sentiment quadrupled in usability testing

- 100% of students in guerrilla testing rated the new dashboard as “good” or “excellent”

- Users described the platform as “cleaner,” “more modern,” and “easier to navigate,” particularly on mobile devices

These enhancements, while incremental, had a meaningful impact on the overall user experience.

Looking ahead

This project laid the groundwork for a longer-term UX strategy for KEATS. A dedicated Product Designer has now joined the team to embed user-centred design practices into ongoing development. Continuous iteration, informed by regular feedback, will guide future improvements.

The next SUS survey will provide further insights into user needs and preferences. Staff and students are encouraged to participate and help shape the future of KEATS.

Final thoughts

KEATS 2025 represents more than a visual update – it marks a shift toward proactive, user-led development. By prioritising usability and aligning design with real user needs, King’s is taking meaningful steps toward delivering a better digital learning experience.

🙋‍♀️ Want to chat more about UX in education or share your KEATS experience?

Our next Coffee & UX ☕ event is on 6th November (9am to 12pm), and we’re inviting presenters and participants from the wider community to join us at King’s. If you’re interested in UX in education, whether to share, learn, or just chat over coffee, we’d love to see you there.

More information and sign-up can be found at this website.

About the author

Juliana Matos, UX Manager at King’s College London | Experienced LXer | Bridging academia, education, and industry through thoughtful, data-driven, human-centred UX strategy.

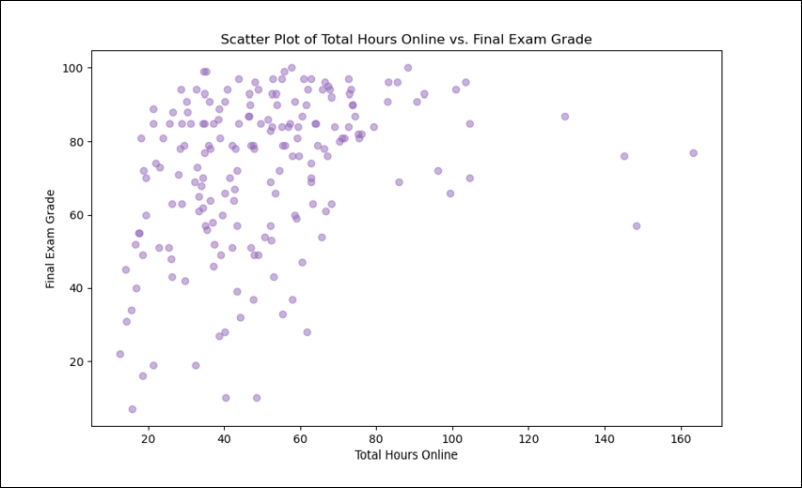

My name is Eleonora Pinto de Moura, and I’m a Lecturer in Mathematics Education at King’s Foundations. My research interests include educational technology, data-driven teaching strategies, and enhancing student engagement through innovative digital learning tools.