This week for our S blog, we bring a post on the important issue of statistical significance, written by a guest blogger from the Said & Dunn blog, led by Dr Erin Dunn. This post is by Khalil Zlaoui, a graduate student in the Dunn Lab. We are very grateful to him for sharing this content with us. The standard measure of statistical significance in science (i.e. whether a result is treated as a real finding rather than a fluke) is whether your effect is associated with a p-value of less than 0.05. What this means is that, if there were truly no effect, you would expect a result at least that extreme by chance no more than one time in twenty. Here Khalil discusses various problems with that approach and suggestions that have been made to address them.

Scientific studies often begin with a hypothesis about the way the world works. The hope is that by analyzing data we can find empirical evidence to uncover the truth, by either supporting or refuting our original hypothesis.

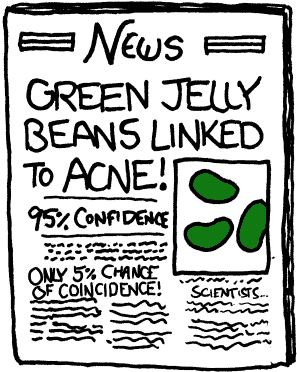

For decades, the scientific community has relied on the p-value as the sole indicator of that truth. But a significant p-value is not proof of strong evidence, and in fact, it was never intended to be used as such. Its misuse and misinterpretation have led to serious problems, including an interdisciplinary replication crisis that we describe in a previous Said & Dunn post.


Benjamin et al. (2017) recently made a proposal to change the default p-value threshold (from 0.05 to 0.005) for claims of new discoveries, a proposal met with some controversy. Some journals, like Basic and Applied Social Psychology, have also gone so far as to ban the use of p-values.

Is tightening the p-value threshold a good idea? Should more journals ban p-values altogether? After wading into the waters around the promises and pitfalls of p-values, here are four main conclusions we have drawn.

1) P-VALUES SHOULD BE INTERPRETED WITH CAUTION

In 2016, the American Statistical Association (ASA) argued that while the p-value is a useful measure, it has been misused and misinterpreted in weighing evidence. The ASA board therefore released six principles to guard against common misconceptions of p-values:

- P-values can indicate how incompatible the data are with a specified statistical model. As models are constructed under a set of assumptions, the smaller the p-value, the greater the incompatibility of the data with the null hypothesis, as long as these assumptions hold.

- A common misuse of p-values is turning them into statements about the truth of the null hypothesis. P-values do not measure the probability that the studied hypothesis is true. They also do not indicate the probability that the data were produced by random chance alone.

- Scientific conclusions and business or policy decisions should not be based only on whether a p-value passes a specific threshold. Conclusions based solely on p-values can pose a threat to public health. In addition to model design and estimation, factors to be considered in decision-making include study design and measurement quality.

- Proper inference requires full reporting and transparency. Conducting several tests of association in search of a significant p-value, and reporting only the ones that cross the threshold, can produce spurious results.

- A p-value, or statistical significance, does not measure the size of an effect or the importance of a result. A smaller p-value is not an indicator of a larger effect.

- By itself, a p-value does not provide a good measure of evidence regarding a model or a hypothesis. A p-value near 0.05 is only weak evidence against the null.
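The one-in-twenty logic and the multiple-testing warning above can be checked with a small simulation. This is a minimal sketch under simplifying assumptions of our own: each "test" is a two-sided one-sample z-test with a known standard deviation of 1, every null hypothesis is true (the data are pure noise), and the function names are hypothetical. With 20 independent tests per study, the chance of at least one p < 0.05 is 1 - 0.95^20, roughly 64%.

```python
import random
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal: mean 0, sd 1


def z_test_p_value(sample):
    """Two-sided p-value for H0: population mean == 0, assuming a known sd of 1."""
    n = len(sample)
    z = (sum(sample) / n) * n ** 0.5  # sample mean divided by its standard error, 1 / sqrt(n)
    return 2 * (1 - STD_NORMAL.cdf(abs(z)))


def familywise_error(alpha, k):
    """Probability of at least one p < alpha among k independent tests when every null is true."""
    return 1 - (1 - alpha) ** k


def simulate_null_studies(alpha=0.05, k=20, n=20, reps=1000, seed=1):
    """Run k tests on pure noise per hypothetical 'study'.

    Returns (share of individual tests with p < alpha,
             share of studies with at least one p < alpha).
    """
    rng = random.Random(seed)
    test_hits = 0
    study_hits = 0
    for _ in range(reps):
        p_values = [z_test_p_value([rng.gauss(0, 1) for _ in range(n)]) for _ in range(k)]
        test_hits += sum(p < alpha for p in p_values)
        study_hits += any(p < alpha for p in p_values)
    return test_hits / (reps * k), study_hits / reps


print(round(familywise_error(0.05, 20), 3))  # 0.642
print(simulate_null_studies())
```

Each individual test is "significant" about 5% of the time, as it should be, yet roughly two thirds of the simulated studies report at least one significant result, which is why selective reporting of those results is misleading.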

2) THERE ARE ALTERNATIVES TO P-VALUES

An interesting alternative to p-values is a Bayesian approach: a method of statistical inference, based on Bayes' theorem, that incorporates a subjective “prior” belief about the hypothesis.

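From Bayes' theorem:

$$\mathrm{POST}_{H_1} = \mathrm{PRIOR}_{H_1} \times \mathrm{BF}, \qquad \mathrm{BF} = \frac{f(x \mid H_1)}{f(x \mid H_0)}$$

where $\mathrm{POST}_{H_1}$ is the posterior odds in favor of $H_1$ (the alternative hypothesis), $\mathrm{PRIOR}_{H_1}$ is the corresponding prior odds, and the Bayes factor BF is the sampling density of the data $x$ under $H_1$ divided by the sampling density of the data under $H_0$.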
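To make the odds form of Bayes' theorem concrete, here is a minimal sketch. It assumes the simplest possible setting, chosen purely for illustration: a single observation from a normal distribution with known standard deviation, and two simple hypotheses (H0: mean 0, H1: mean 2). The prior odds of 1:10 against H1 are likewise a made-up value, and the function names are hypothetical. Real analyses usually place a prior distribution over the effect under H1, which changes how the Bayes factor is computed.

```python
from statistics import NormalDist


def bayes_factor(x, mu0, mu1, sd=1.0):
    """BF = f(x | H1) / f(x | H0) for two simple hypotheses about a normal mean.

    Assumption: H0 says mean == mu0 and H1 says mean == mu1, with a known sd.
    """
    return NormalDist(mu1, sd).pdf(x) / NormalDist(mu0, sd).pdf(x)


def posterior_odds(prior_odds, bf):
    """Bayes' theorem in odds form: posterior odds = prior odds x Bayes factor."""
    return prior_odds * bf


def odds_to_probability(odds):
    """Convert odds in favor of H1 into a probability that H1 is true."""
    return odds / (1 + odds)


# Hypothetical example: observe x = 2, compare H0: mean 0 with H1: mean 2,
# starting from prior odds of 1:10 against H1.
bf = bayes_factor(2.0, mu0=0.0, mu1=2.0)  # equals e**2, about 7.39
post = posterior_odds(1 / 10, bf)          # about 0.74
print(round(odds_to_probability(post), 3))  # 0.425
```

Even a Bayes factor of about 7 in favor of H1 leaves H1 less likely than not here, because the prior odds were skeptical. This is the sense in which the prior shapes the conclusion.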

Interestingly, under certain assumptions p-values can be mapped onto ranges of Bayes Factors. This correspondence between p-values in the frequentist world (meaning the statistical inference framework that is most commonly used) and Bayes Factors in the Bayesian world can reshape the debate about p-values and help us reconsider how strong the evidence against the null really is when a p-value crosses a given threshold.

Under some reasonable assumptions, a two-sided p-value of 0.05 in the frequentist world corresponds to Bayes Factors in favor of the alternative hypothesis ranging from 2.5 to 3.4. In the Bayesian world, this is considered weak evidence against the null. Based on the correspondence between p-values and Bayes Factors, Benjamin et al. (2017) proposed to redefine statistical significance at 0.005.
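One widely used way to translate a p-value into a Bayes factor is the calibration of Sellke, Bayarri and Berger: for p < 1/e, the Bayes factor in favor of H1 is at most -1/(e · p · ln p). The sketch below computes this bound. We assume it is the kind of calculation behind the lower figures quoted in this post (it gives about 2.5 at p = 0.05 and about 14 at p = 0.005); the upper figures (3.4 and 26) rest on other assumptions about H1 that this sketch does not try to reproduce.

```python
import math


def bayes_factor_bound(p):
    """Upper bound on the Bayes factor in favor of H1 implied by a p-value,
    using the Sellke-Bayarri-Berger calibration -1 / (e * p * ln p).
    Only valid for 0 < p < 1/e."""
    if not 0 < p < 1 / math.e:
        raise ValueError("the calibration applies only for 0 < p < 1/e")
    return -1 / (math.e * p * math.log(p))


for p in (0.05, 0.005):
    print(p, round(bayes_factor_bound(p), 2))
# 0.05 2.46
# 0.005 13.89
```

Because this is an upper bound, a p-value just under 0.05 cannot, under these assumptions, correspond to odds shifting by much more than a factor of 2.5 in favor of the alternative.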

3) TIGHTENING THE P-VALUE THRESHOLD DOESN’T FULLY SOLVE THE REPLICATION CRISIS AND COULD LEAD TO OTHER PROBLEMS


A two-sided p-value of 0.005 corresponds to Bayes Factors in favor of the alternative ranging from 14 to 26, which by Bayesian conventions counts as substantial to strong evidence. Benjamin et al.’s proposal to change the p-value threshold was made to help address the replication crisis, in which too few studies have been able to reproduce the findings of the original studies. But moving to this more stringent threshold comes at a price: the need for larger samples and possibly unacceptable false negative rates. Is such a trade-off worth it?
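The price of the stricter threshold can be estimated with a standard power calculation. This is a minimal sketch assuming a two-sided one-sample z-test with known standard deviation, a hypothetical standardized effect size of 0.5, and the conventional 80% power target; the far rejection tail is ignored, and the function names are our own. Other designs (two groups, t-tests, unknown variance) give different absolute numbers.

```python
import math
from statistics import NormalDist

Z = NormalDist()  # standard normal


def required_n(effect_size, alpha, power=0.8):
    """Sample size for a two-sided one-sample z-test (known sd) to detect a
    standardized effect with the given power, ignoring the far rejection tail."""
    z_alpha = Z.inv_cdf(1 - alpha / 2)
    z_power = Z.inv_cdf(power)
    return math.ceil(((z_alpha + z_power) / effect_size) ** 2)


def power_at_n(effect_size, alpha, n):
    """Approximate power of the same test at a fixed sample size n."""
    z_alpha = Z.inv_cdf(1 - alpha / 2)
    return Z.cdf(effect_size * math.sqrt(n) - z_alpha)


for alpha in (0.05, 0.005):
    print(alpha, required_n(0.5, alpha))
# 0.05 32
# 0.005 54

# Keeping n = 32 but switching to alpha = 0.005 lowers power from about 0.8:
print(round(power_at_n(0.5, 0.005, 32), 2))  # 0.51
```

Under these assumptions the stricter threshold needs roughly 70% more participants to keep the same power, and if the sample size stays fixed, the false negative rate rises from about 20% to about 49%.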

It’s important to keep in mind that p-values are not the only cause of the lack of reproducibility in science. While p-values might be an important contributor, there are other real issues affecting replication, including selection effects, a tendency toward multiple testing, hunting for significance (p-hacking), violated statistical assumptions, and so on. Tightening the p-value threshold from 0.05 to 0.005 will not necessarily address these issues.
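One form of p-hacking, checking the data repeatedly and stopping as soon as the result is significant, shows why a stricter threshold alone does not remove the problem. The sketch below is a hypothetical simulation under our own assumptions: the null hypothesis is true, the analyst runs a two-sided z-test (known sd of 1) after every batch of 10 observations, for up to 10 looks, and stops at the first significant result. The function name and parameters are illustrative only.

```python
import random
from statistics import NormalDist

Z = NormalDist()  # standard normal


def peeking_false_positive_rate(alpha, batch=10, looks=10, reps=4000, seed=7):
    """Share of null 'studies' declared significant when the analyst runs a
    z-test (known sd = 1) after every batch and stops at the first p < alpha."""
    rng = random.Random(seed)
    z_crit = Z.inv_cdf(1 - alpha / 2)  # |z| above this means two-sided p < alpha
    hits = 0
    for _ in range(reps):
        total, n = 0.0, 0
        for _ in range(looks):
            total += sum(rng.gauss(0, 1) for _ in range(batch))
            n += batch
            if abs(total / n) * n ** 0.5 > z_crit:  # z = mean / (1 / sqrt(n))
                hits += 1
                break
    return hits / reps


for alpha in (0.05, 0.005):
    print(alpha, peeking_false_positive_rate(alpha))
```

In both cases the simulated false positive rate ends up several times higher than the nominal threshold. Tightening alpha shrinks the numbers but does not fix the underlying practice.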

4) BUT THERE ARE ALTERNATIVES WE SHOULD ALL BE USING

Some statisticians have argued in favor of estimation (focusing on the size of an effect and the uncertainty around it) over testing (focusing on rejecting or accepting a hypothesis based on p-values). Even when the interest lies in testing an effect, researchers could rely on confidence, credible, or prediction intervals instead.
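The difference between reporting a p-value and reporting an estimate with its interval is easiest to see side by side. This is a minimal sketch with two made-up studies described only by summary statistics (mean difference, standard deviation, sample size). It uses a normal-approximation confidence interval for simplicity; for a sample as small as 16, a t-based interval would be somewhat wider. The function name and all numbers are hypothetical.

```python
from statistics import NormalDist

Z = NormalDist()  # standard normal


def summarize(mean_diff, sd, n, level=0.95):
    """Estimate, normal-approximation confidence interval, and two-sided
    p-value for a mean difference, computed from summary statistics."""
    se = sd / n ** 0.5
    z_crit = Z.inv_cdf(0.5 + level / 2)
    p = 2 * (1 - Z.cdf(abs(mean_diff / se)))
    return {"estimate": mean_diff, "ci": (mean_diff - z_crit * se, mean_diff + z_crit * se), "p": p}


# Two hypothetical studies (made-up numbers for illustration only)
large_trivial = summarize(mean_diff=0.05, sd=1.0, n=10_000)
small_substantial = summarize(mean_diff=0.8, sd=1.0, n=16)

for name, s in (("large study, tiny effect", large_trivial),
                ("small study, big effect", small_substantial)):
    lo, hi = s["ci"]
    print(f"{name}: estimate {s['estimate']}, 95% CI ({lo:.2f}, {hi:.2f}), p = {s['p']:.1g}")
```

The large study has the far smaller p-value (about 6e-7 versus about 0.001) while its estimated effect is sixteen times smaller. The intervals make both the size of each effect and the precision of each study visible in a way the p-values alone do not.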

So in the end, we think that rather than ditch the p-value altogether, we should shift our focus from p-values to study design, effect size and confidence intervals, which we hope can help us better understand the evidence to support our hypotheses and ultimately uncover the truth about the way the world works.
