This is an evaluation guide.

What is it?

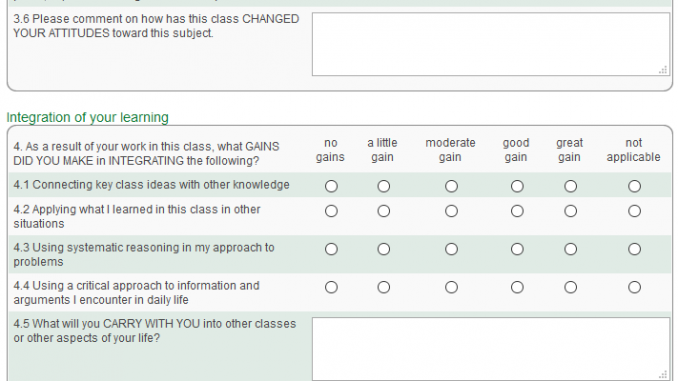

A questionnaire is an efficient, economical way to obtain a large number of responses. When administered digitally, questionnaires scale up with minimal extra resource, since they tend to use closed questions such as multiple choice and scales (if you need to ask open questions, consider interviews instead). These questions generate standardised, quantitative, optionally anonymous data which can shed light on hypotheses and assumptions. Good questionnaires are focused and relevant, generate only useful data, and are careful with respondents’ time and energy (Cohen et al, 2017, p498).

There’s a lot to know about questionnaires – though don’t let that deter you from getting started. This guide aims to introduce some basic and less-obvious principles, and refer you to some education-specific handbooks for further information.

What can it tell me about teaching?

Through closed questions, a questionnaire can elicit information about students’ attributes, acts, behaviours, preferences and other attitudes. Be ready to triangulate questionnaire data with other methods, since questionnaires often raise further questions which need to be probed by interviewing a sample of respondents or by using more naturalistic methods.

How could I use it?

For example:

- To gather mid-term feedback from students.

- To compare students’ responses before and after trying something new.

How can I collect the data or evidence?

First specify your aim.

Once your aim is specified, the next stage is to operationalise its concepts into variables which can be measured with the kinds of closed questions used in most questionnaires. Start by searching the literature for an existing questionnaire you could adapt to your purposes. For example, Lizzio and Wilson (2008) used both open and closed questions in their questionnaire eliciting students’ perceptions of quality of feedback. If you can’t find anything, you can author or assemble questions yourself; Maurer (2018) recommends discussing your questionnaire with an experienced questionnaire designer if you can find one. Kember and Ginns (2012, p57) recommend preliminary informal chats (e.g. with students and colleagues) to inform the questions. To understand differences within your sample, include questions about, say, ethnicity and disability, which will allow you to analyse your data for interactions.

Identifying who should respond to your questionnaire is known as sampling. Questionnaires scale up with minimal resource implications, so you may be able to reach an entire population, e.g. all undergraduates on a given programme (always being careful of respondents’ time). As a general rule, a sample should be as representative as possible of the wider population you’re interested in.

For a practical overview of questionnaire design and question authoring, see the relevant chapters in ‘Evaluating teaching and learning: a practical handbook for colleges, universities and the scholarship of teaching’ (Kember and Ginns, 2012). Here are some of their questions for students, taken from the item bank of individual questions which can be freely downloaded from the publisher’s site:

- I sometimes question the way others do something and try to think of a better way.

- As long as I can remember handout material for examinations, I do not have to think too much.

- I often re-appraise my experience so I can learn from it and improve for my next performance.

- I feel that virtually any topic can be highly interesting once I get into it.

- I find I can get by in most assessments by memorising key sections rather than trying to understand them.

- I come to most classes with questions in mind that I want answering.

For a more in-depth yet concise examination of possible question items, see Chapter 24 in ‘Research methods in education. 8th edition’ by Cohen, Manion and Morrison. King’s Library offers this in digital and paper format.

Although digital data collection makes processing and analysis far more efficient, it can negatively affect the response rate. One compromise is to collect the data digitally during a taught session, using PollEverywhere or a Microsoft Form. One exhilarating, if initially hair-raising, possibility with PollEverywhere is to enable respondents to upvote or downvote anonymous responses depending on agreement or disagreement (this introduces focus-group-type qualities at scale, and has the extra benefit of performing some of the data analysis during the session). It is important to explicitly reassure participants that divergent responses are valued and welcome even if nobody else agrees.

If the questionnaire is new, pilot it first. Kember and Ginns (2012) give some guidance on testing reliability and Maurer (2018) describes some approaches to validating the questions (including talking with pilot respondents).

Software – if you’re at King’s there are a couple of options. For a straightforward questionnaire with a few different question-types and ability to branch, try Microsoft Forms (sign in with your k-number@kcl.ac.uk credentials). If you need something more powerful contact IT for an account with Jisc Online Surveys.

How can I analyse the data or evidence?

Before finalising the questionnaire, return to your initial question and consider how you will analyse the data. Part 5 of Cohen et al (2017) has practical chapters on analysing quantitative and qualitative data. Vicki Dale (2014) outlines some approaches to summarising and visualising the data which are friendly to relatively inexperienced evaluators, as follows.

Descriptive statistics summarise the data in ways which can be visualised using e.g. Excel or SPSS. You can report:

- Measures of central tendency of the data, including the mean (average), median (middle value) and mode (most frequent value).

- Measures of dispersion (also known as spread) of the data, including standard deviation, range, and interquartile range.
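
As a minimal sketch of the measures listed above, the Python snippet below uses the standard library’s `statistics` module in place of Excel or SPSS. The choice of Python, the `describe` function and the response values are illustrative assumptions, not something taken from Dale (2014).

```python
import statistics

# Hypothetical responses to one 5-point scale item
# (1 = strongly disagree, 5 = strongly agree).
responses = [4, 5, 3, 4, 2, 5, 4, 3, 4, 1]


def describe(values):
    """Return measures of central tendency and dispersion for a list of numbers."""
    # quantiles(n=4) returns the three quartile cut points (Q1, median, Q3).
    q1, _, q3 = statistics.quantiles(values, n=4)
    return {
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "mode": statistics.mode(values),
        "stdev": statistics.stdev(values),  # sample standard deviation
        "range": max(values) - min(values),
        "iqr": q3 - q1,  # interquartile range
    }


summary = describe(responses)
for name, value in summary.items():
    print(f"{name}: {value:.2f}")
```

The same dictionary could be computed per question and pasted into a report table or used to label a chart.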

To measure the difference between two or more groups, your choice of tests depends on the kind of data you have. Yes/no questions, multiple choice questions and scale questions are analysed in a different way from continuous data (e.g. years or money). See Chapter 41 of Cohen et al (2017) for some straightforward guidance.
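
For categorical data such as yes/no answers, one commonly used option is Pearson’s chi-square test of independence. This guide does not name a specific test, so treat the sketch below as an assumption about one reasonable choice; the function name `chi_square_2x2` and the counts are hypothetical. It handles only a 2x2 table (two groups, two answers) and applies no continuity correction.

```python
import math


def chi_square_2x2(table):
    """Pearson chi-square test of independence for a 2x2 table of counts.

    Returns (statistic, p_value). No continuity correction is applied.
    """
    (a, b), (c, d) = table
    n = a + b + c + d
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count if group and answer were unrelated.
            expected = row_totals[i] * col_totals[j] / n
            statistic += (observed - expected) ** 2 / expected
    # With 1 degree of freedom, the chi-square upper tail is erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value


# Hypothetical counts: rows are two seminar groups, columns are yes / no answers.
counts = [[30, 10], [20, 20]]
statistic, p_value = chi_square_2x2(counts)
print(f"chi-square = {statistic:.2f}, p = {p_value:.3f}")
```

The chi-square approximation becomes unreliable when expected counts are small (a common rule of thumb is below about 5), so check the guidance in Cohen et al (2017) before relying on a result from a small sample.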

Cohen and colleagues (2017, p501) caution that the response rate for questionnaires is likely to be considerably lower than 50%. Generally 30 responses is the lowest threshold for statistical analysis.
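
The arithmetic behind these two checks is simple enough to sketch in a few lines of Python. The function name `response_summary` and the figures are hypothetical; the default minimum of 30 is the rule of thumb mentioned above, not a hard statistical limit.

```python
def response_summary(invited, responded, minimum=30):
    """Return the response rate and whether the count reaches the minimum."""
    if invited <= 0:
        raise ValueError("invited must be positive")
    rate = responded / invited
    return rate, responded >= minimum


# Hypothetical cohort: 120 students invited, 42 completed the questionnaire.
rate, enough = response_summary(invited=120, responded=42)
print(f"Response rate: {rate:.0%}; enough for statistical analysis: {enough}")
```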

What else should I know?

Respondent fatigue, particularly in heavily-surveyed populations like university students, compromises the value of open questions. Because of the effort involved in completing and analysing open questions, Kember and Ginns (2012, p57) advise only using them if you are committed to making use of the data. Aim to keep the questionnaire interesting and brief, focused and relevant. Consider whether you can obtain the information in a way which is more rewarding and interesting for participants. Generally avoid asking questions if you could source the answers elsewhere.

The limitations of questionnaires include the several kinds of bias inherent to self-reporting. In a research article which sent ripples through the active learning community, Deslauriers and colleagues (2019) found a discrepancy between what students felt they had learned (elicited through a questionnaire) and what they had actually learned (elicited through a test).

Another limitation is the inability to probe. This is why Maurer (2018) says he has not found questionnaires useful for exploring phenomena which have not previously been researched, or which have not been researched in a particular discipline. Instead he recommends open ended interview questions which can be followed up with further clarifying questions.

For the kind of internal purposes this guidance mainly supports, you don’t need ethical approval (though we would love you to consider publishing beyond King’s, in which case you do need it). That said, it’s important to adhere to ethical principles of gaining informed consent – in particular being clear about confidentiality or identifiability, letting participants know what you will do with their data, and reassuring them that participation will not affect any other part of their study or assessment. King’s colleagues can consult local Research Ethics guidance or contact King’s Academy.

Where can I find examples?

As noted above, Kember and Ginns (2012) have a chapter on Questionnaires which introduces some existing, adaptable instruments in full. There is also an item bank of individual questions which can be freely downloaded.

One widely-used example is the Critical Incident Questionnaire developed by Stephen Brookfield (2011).

Search the literature for a questionnaire instrument which meets your purposes.

References

- Brookfield, S. (2011) Becoming a Critically Reflective Teacher. San Francisco: Jossey-Bass.

- Cohen, L., Manion, L., & Morrison, K. (2017). Research methods in education. 8th edition. London ; New York: Routledge.

- Dale, V. (2014). UCL e-learning evaluation toolkit. https://discovery.ucl.ac.uk/id/eprint/1462309/

- Deslauriers, L., McCarty, L. S., Miller, K., Callaghan, K., & Kestin, G. (2019). Measuring actual learning versus feeling of learning in response to being actively engaged in the classroom. Proceedings of the National Academy of Sciences, 201821936. https://doi.org/10.1073/pnas.1821936116

- Hutchings, P., & Carnegie Foundation for the Advancement of Teaching (Eds.). (2000). Opening lines: Approaches to the scholarship of teaching and learning. Retrieved from https://eric.ed.gov/?id=ED449157

- Lizzio, A., & Wilson, K. (2008). Feedback on assessment: Students’ perceptions of quality and effectiveness. Assessment & Evaluation in Higher Education, 33(3), 263–275. https://doi.org/10.1080/02602930701292548

- Maurer, T. (2018). Meaningful questionnaires. In: Chick, N. L. (Ed.). (2018). SoTL in action: Illuminating critical moments of practice (First edition). Sterling, Virginia: Stylus.

- Kember, D., & Ginns, P. (2012). Evaluating teaching and learning: A practical handbook for colleges, universities and the scholarship of teaching. London ; New York: Routledge.
