Problem

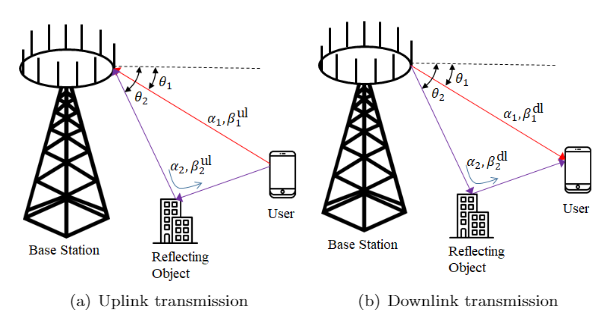

Most cellular deployments rely on frequency division duplex (FDD) due to its lower latency and greater coverage potential. In FDD, uplink and downlink channels use different carrier frequencies. Therefore, as illustrated in Fig. 1, with FDD, downlink channel state information (CSI) cannot be directly obtained from uplink pilots due to a lack of full reciprocity between uplink and downlink channels.

Fig. 1 FDD massive MIMO system over multipath channels with partial reciprocity
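To make the idea of partial reciprocity concrete, the following is a minimal sketch of a multipath channel seen at two carrier frequencies. It assumes a uniform linear array, and it assumes that path angles and average path powers are the long-term features shared by uplink and downlink, while the small-scale fading gains are drawn independently. The carrier frequencies, array size, and function names are hypothetical choices for illustration, not values taken from the paper.

```python
import numpy as np

C = 3e8  # speed of light in m/s

def steering_vector(n_antennas, angle_rad, carrier_hz, spacing_m):
    """Array response of a uniform linear array at a given carrier frequency."""
    wavelength = C / carrier_hz
    n = np.arange(n_antennas)
    return np.exp(-2j * np.pi * spacing_m / wavelength * n * np.sin(angle_rad))

def multipath_channel(angles, gains, n_antennas, carrier_hz, spacing_m):
    """Sum of per-path array responses weighted by complex path gains."""
    h = np.zeros(n_antennas, dtype=complex)
    for angle, gain in zip(angles, gains):
        h += gain * steering_vector(n_antennas, angle, carrier_hz, spacing_m)
    return h

def complex_normal(rng, size):
    """Unit-variance circularly symmetric complex Gaussian samples."""
    return (rng.standard_normal(size) + 1j * rng.standard_normal(size)) / np.sqrt(2)

rng = np.random.default_rng(0)
n_antennas, n_paths = 32, 3
f_ul, f_dl = 1.9e9, 2.1e9        # hypothetical uplink/downlink carriers
spacing = 0.5 * C / f_dl         # half a downlink wavelength

# Long-term features, assumed identical in uplink and downlink.
angles = rng.uniform(-np.pi / 3, np.pi / 3, n_paths)
path_powers = rng.uniform(0.2, 1.0, n_paths)

# Short-term fading, assumed independent across the two links.
gains_ul = np.sqrt(path_powers) * complex_normal(rng, n_paths)
gains_dl = np.sqrt(path_powers) * complex_normal(rng, n_paths)

h_ul = multipath_channel(angles, gains_ul, n_antennas, f_ul, spacing)
h_dl = multipath_channel(angles, gains_dl, n_antennas, f_dl, spacing)
```

Under these assumptions, `h_ul` and `h_dl` are different vectors, so the downlink channel cannot simply be read off the uplink one, yet both are built from the same angles and path powers.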

The conventional solution to this problem is to leverage downlink training and feedback from the devices. In massive multiple-input multiple-output (MIMO) systems, however, this generally causes a prohibitively large downlink and uplink overhead, because the pilot sequences must have a length proportional to the number of antennas.
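The dependence of the pilot length on the number of antennas can be illustrated with a small noiseless least-squares example. This is only a sketch: the DFT-based pilots, the antenna count, and the helper names are assumptions made here for illustration and do not describe any particular standard or the scheme in the paper.

```python
import numpy as np

def dft_pilots(n_antennas, length):
    """First `length` columns of the N x N DFT matrix, used as pilot sequences."""
    n = np.arange(n_antennas)[:, None]
    k = np.arange(length)[None, :]
    return np.exp(-2j * np.pi * n * k / n_antennas)

def ls_downlink_estimate(y, pilots):
    """Least-squares estimate of h from the received pilots y = h @ pilots."""
    # Solve pilots.T @ h = y; lstsq returns the minimum-norm solution
    # when there are fewer pilot symbols than antennas.
    h_hat, *_ = np.linalg.lstsq(pilots.T, y, rcond=None)
    return h_hat

rng = np.random.default_rng(0)
n_antennas = 16
h = (rng.standard_normal(n_antennas) + 1j * rng.standard_normal(n_antennas)) / np.sqrt(2)

full = dft_pilots(n_antennas, n_antennas)        # pilot length equal to N
short = dft_pilots(n_antennas, n_antennas // 4)  # pilot length equal to N / 4

err_full = np.linalg.norm(ls_downlink_estimate(h @ full, full) - h)
err_short = np.linalg.norm(ls_downlink_estimate(h @ short, short) - h)
```

With as many pilot symbols as antennas the channel is recovered up to numerical precision, whereas the shorter pilot leaves most of the channel unobservable unless additional structure is exploited.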

Recent state-of-the-art proposals that address the inefficiencies of this conventional solution adopt machine learning (ML) tools. The use of ML is justified by the lack of efficient, optimal model-based methods.

In our recent work to be presented at SPAWC 2021, we contribute to this line of work by introducing a new ML-based solution that improves over the state of the art by leveraging partial channel reciprocity and the tool of hypernetworks.

Our approach

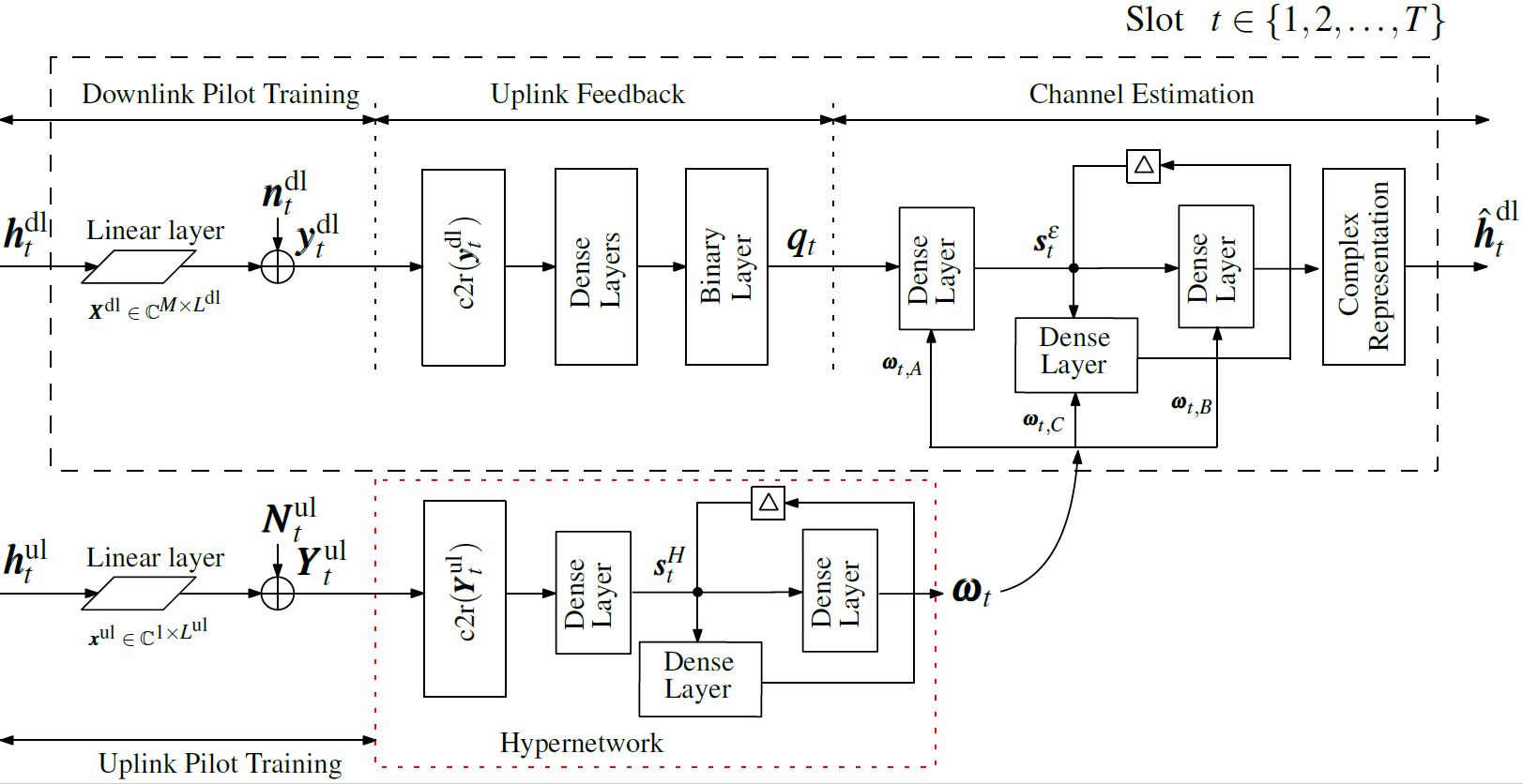

Fig. 2 The proposed HyperRNN architecture for end-to-end channel estimation based on temporal correlations and partial reciprocity

In this work, we propose a novel end-to-end architecture, HyperRNN, illustrated in Fig. 2. The main innovation of the approach is that simultaneously transmitted pilot symbols in the uplink, across multiple time slots, are leveraged to automatically extract long-term reciprocal channel features (see Fig. 1) via a hypernetwork that determines the weights of the downlink CSI estimation or beamforming network. Importantly, the long-term features are not estimated explicitly; rather, they implicitly underlie the discriminative mapping that the hypernetwork implements between the uplink pilots and the downlink CSI estimation network. The second main innovation is to incorporate recurrent neural networks (RNNs), in lieu of (feedforward) deep neural networks (DNNs), for both uplink and downlink processing in order to leverage the temporal correlation of the fading amplitudes.
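The hypernetwork idea described above can be sketched in a few lines of NumPy. This is a deliberately simplified, untrained illustration and not the HyperRNN architecture of the paper: it assumes a plain Elman RNN as the hypernetwork and a single linear layer as the downlink estimator, and all dimensions, variable names, and the random inputs are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

n_antennas = 8     # base-station antennas (hypothetical size)
n_slots = 5        # uplink time slots processed by the hypernetwork
dl_pilot_len = 4   # downlink pilot length, shorter than n_antennas
hidden = 16        # RNN state size

def to_real(x):
    """Stack real and imaginary parts so real-valued layers can process complex data."""
    return np.concatenate([x.real, x.imag], axis=-1)

# Hypernetwork parameters (randomly initialised; training is omitted here).
main_in, main_out = 2 * dl_pilot_len, 2 * n_antennas
W_in = 0.1 * rng.standard_normal((hidden, 2 * n_antennas))
W_rec = 0.1 * rng.standard_normal((hidden, hidden))
W_head = 0.1 * rng.standard_normal((main_out * main_in, hidden))

def hypernetwork(uplink_pilots):
    """Run an Elman RNN over the uplink slots and emit the main network's weights."""
    state = np.zeros(hidden)
    for y_ul in to_real(uplink_pilots):  # one recurrent step per time slot
        state = np.tanh(W_in @ y_ul + W_rec @ state)
    return (W_head @ state).reshape(main_out, main_in)

def downlink_estimator(dl_feedback, weights):
    """Main network: one linear layer whose weights are supplied by the hypernetwork."""
    out = weights @ to_real(dl_feedback)
    return out[:n_antennas] + 1j * out[n_antennas:]

# Placeholder received signals (random, standing in for actual pilot observations).
uplink_pilots = rng.standard_normal((n_slots, n_antennas)) \
    + 1j * rng.standard_normal((n_slots, n_antennas))
dl_feedback = rng.standard_normal(dl_pilot_len) + 1j * rng.standard_normal(dl_pilot_len)

weights = hypernetwork(uplink_pilots)
h_dl_hat = downlink_estimator(dl_feedback, weights)
```

The point of the sketch is the data flow: the uplink observations never enter the downlink estimator directly, they only shape its weights, so whatever long-term structure the hypernetwork extracts is used implicitly.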

Results

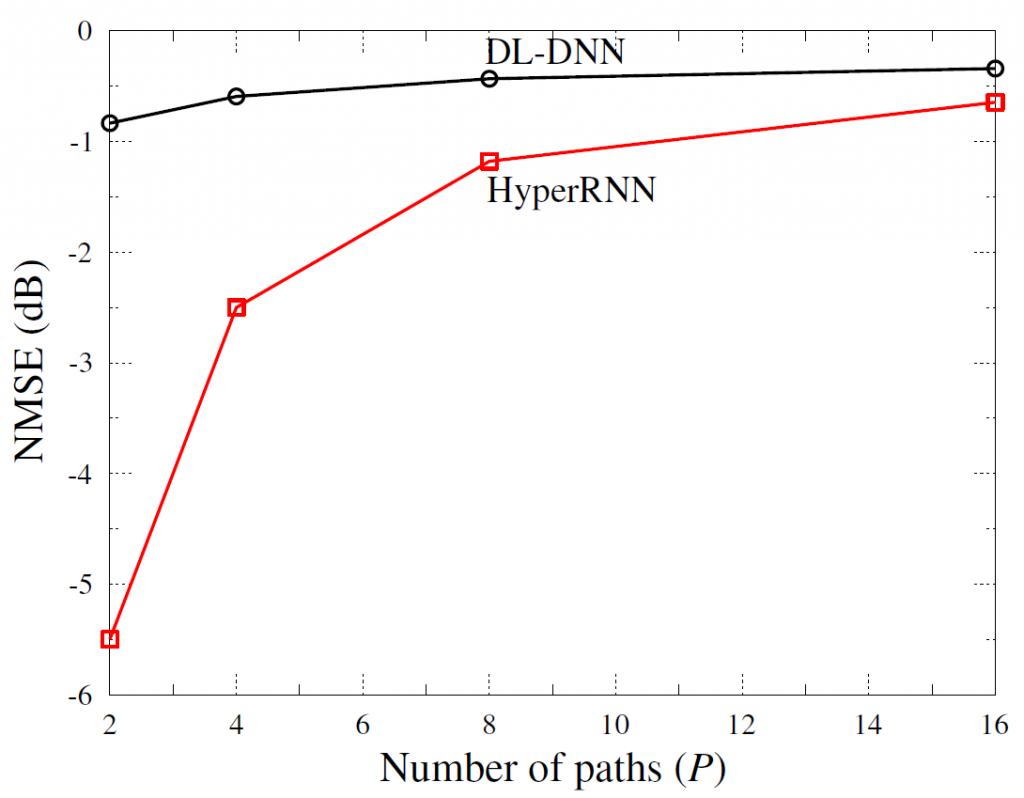

We compare the normalized mean square error (NMSE) performance of our proposed HyperRNN with that of an earlier end-to-end trained scheme, the downlink-based DNN (DL-DNN), which encompasses downlink pilot training, distributed quantization for the uplink, and downlink channel estimation. Simulations are performed over the spatial channel model (SCM) standardized in 3GPP Release 16. Fig. 3 shows the NMSE of the proposed HyperRNN and of the benchmark DL-DNN for channel estimation as a function of the number of paths. The performance gains are larger when the channel has fewer paths. In fact, in this regime, the invariance of the long-term features of the channel defines a low-rank structure of the channel that can be leveraged by the hypernetwork.

Fig. 3 NMSE of the HyperRNN and DL-DNN over frequency-flat fading channels with different numbers of paths for an FDD system
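For reference, the NMSE metric used in the comparison can be computed along the following lines. The sketch assumes the common definition in which the total squared estimation error is divided by the total squared norm of the true channels over a batch; the paper may normalize per sample instead, and the function names are hypothetical.

```python
import numpy as np

def nmse(h_true, h_est):
    """Normalized MSE over a batch: total squared error over total channel energy."""
    err = np.sum(np.abs(h_true - h_est) ** 2)
    ref = np.sum(np.abs(h_true) ** 2)
    return err / ref

def nmse_db(h_true, h_est):
    """NMSE expressed in decibels, as is typical for plots against channel parameters."""
    return 10 * np.log10(nmse(h_true, h_est))

rng = np.random.default_rng(0)
h_true = rng.standard_normal((100, 8)) + 1j * rng.standard_normal((100, 8))
h_scaled = 0.9 * h_true  # an estimate that is off by 10% in amplitude
```

An estimate that is uniformly 10% too small in amplitude, such as `h_scaled`, has an NMSE of 0.01, that is, -20 dB.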

The full paper can be found here.
