Problem

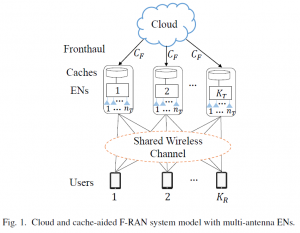

Content delivery is one of the most important use cases for mobile broadband services in 5G networks. As seen in Fig. 1, in 5G systems content can be stored at distributed units, or edge nodes (ENs), and hence closer to the users, with the aim of minimizing delivery latency and network congestion. Furthermore, a cloud processor, also known as the central unit, typically has access to the content library and connects to the ENs via finite-capacity fronthaul links. The central unit is not only necessary to enable content delivery when the overall edge cache capacity is insufficient; it can also foster cooperative transmission from the ENs to the users by sharing common information with the ENs. However, any transmission from the cloud unit to the ENs comes at a latency cost due to the use of the fronthaul links. How should edge and fronthaul resources be optimally combined to minimize delivery latency?

In a recent work published in the IEEE Transactions on Information Theory, we provided a conclusive answer to this question from an information-theoretic viewpoint, under the following simplifying assumptions:

1) only uncoded edge caching is allowed;

2) the cloud can only send fractions of contents via the fronthaul links;

3) the ENs are constrained to use standard linear precoding on the wireless channel;

4) the signal-to-noise ratio is sufficiently large.

Some Results

Our work derives a caching and delivery policy that offers a near-optimal trade-off between the fronthaul latency overhead and the downlink transmission latency from the ENs to the users. Two scenarios are identified, depending on system parameters such as the fronthaul capacity, the edge cache capacity, and the number of antennas per EN:

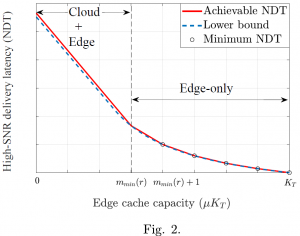

1) When the overall cache capacity of the ENs is below a given threshold, as illustrated in Fig. 2, both fronthaul and edge caching resources must be used in order to minimize latency. Importantly, even when the edge caches alone would be sufficient to deliver all requested contents, the policy generally makes use of fronthaul resources in order to foster cooperative transmission among the ENs. In fact, when the fronthaul capacity is sufficiently large, the latency cost of the fronthaul delay is outweighed by the cooperative transmission gains in the downlink;

2) Otherwise, when the edge cache capacity is above the threshold, as seen in Fig. 2, only edge caching should be used. In this regime, the gains due to enhanced EN cooperation do not compensate for the latency of fronthaul transmission. Interestingly, the threshold on the edge cache capacity increases with the number of antennas at each EN, since edge processing becomes more effective when more antennas are deployed.

The full paper is available on IEEE Xplore (open-access version on arXiv).