The scientific process is being used to help researchers reflect on the work being conducted in different fields of science (an approach known as ‘meta-science’). Today, Tom McAdams discusses how meta-science is changing the way scientists understand and approach their work.

The Oxford online dictionary defines the scientific method as “a method of procedure that has characterized natural science since the 17th century, consisting in systematic observation, measurement, and experiment, and the formulation, testing, and modification of hypotheses.” If we are rigorous in our application of the scientific method, then we should arrive at the truth. So, does it work? Well, in a word, yes. But if you need convincing, here is a very entertaining (short) clip of Richard Dawkins putting it better than I ever could: https://www.youtube.com/watch?v=0OtFSDKrq88

“If we are rigorous in our application of the scientific method, then we should arrive at the truth.”

But are we to believe that science is infallible? Well, not all science is good science, even the best scientists make mistakes, and spurious findings will inevitably occur. There have even been high-profile examples of disreputable scientists who have fabricated data in order to boost their own careers (e.g. https://www.newscientist.com/article/dn21118-psychologist-admits-faking-data-in-dozens-of-studies/). However, the scientific process is supposed to be robust to such issues, and over time ought to be self-correcting. Replication is an important part of this process. That is, when new findings are reported, attempts should be made to replicate the results, preferably by different researchers, in a different sample, perhaps using different instruments or methods. This is intended to ensure that the original results were not somehow spurious. If the findings are replicated, then they are more likely to be ‘real’. If not, then people are less likely to believe them.

“…not all science is good science, even the best scientists make mistakes…”

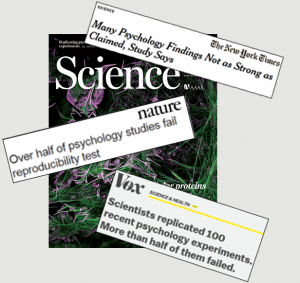

A major problem, however, is that scientists are human beings (strange human beings we may be, but human nonetheless) and this introduces bias into the scientific process. Researchers are under pressure to publish, and results are far more likely to be published (and published in higher impact journals) when they are novel, exciting, and statistically significant. This can introduce ‘skew’ into the literature, whereby scientists and journal editors focus their efforts on identifying and publishing novel, significant findings. Less novel or non-significant findings get side-lined. This can mean that replication attempts and negative findings are overlooked or not published. It can also mean that researchers go ‘fishing’ in their datasets in an attempt to find significant results. In recent times this behaviour has contributed to what some have termed the ‘replicability crisis’. This refers to the situation in which scientists have found that many scientific findings simply do not replicate. This constitutes a major problem for scientists, perhaps most memorably summarised by John Ioannidis in his provocatively titled article “Why most published research findings are false”.
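To make the ‘fishing’ problem a little more concrete, here is a minimal simulation sketch. It is my own illustration rather than anything taken from the studies mentioned here, and every name and setting in it (`fishing_rate`, 30 people per group, 2,000 simulated studies, a 0.05 threshold) is an assumption chosen for the example. Each simulated study compares two groups on several outcomes where no real effect exists, and counts as a ‘finding’ if any one outcome comes out significant.

```python
import math
import random


def two_sided_p(group_a, group_b):
    """Two-sided z-test p-value for a difference in means.

    Assumes equal group sizes and a known variance of 1 in both groups,
    which holds here only because we generate the data ourselves.
    """
    n = len(group_a)
    diff = sum(group_a) / n - sum(group_b) / n
    z = diff / math.sqrt(2.0 / n)
    return math.erfc(abs(z) / math.sqrt(2.0))


def fishing_rate(n_outcomes, n_studies=2000, n_per_group=30, alpha=0.05, seed=1):
    """Share of no-effect studies with at least one 'significant' outcome."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_studies):
        for _ in range(n_outcomes):
            # Both groups come from the same distribution: there is no true effect.
            a = [rng.gauss(0, 1) for _ in range(n_per_group)]
            b = [rng.gauss(0, 1) for _ in range(n_per_group)]
            if two_sided_p(a, b) < alpha:
                hits += 1
                break  # one significant outcome is enough to "publish"
    return hits / n_studies


if __name__ == "__main__":
    for k in (1, 5, 20):
        expected = 1 - (1 - 0.05) ** k  # chance of at least one false positive
        print(f"{k:2d} outcomes: simulated {fishing_rate(k):.2f}, expected {expected:.2f}")
```

With independent outcomes, the chance of at least one false positive is 1 − 0.95^k: about 5% for one outcome, 23% for five, and 64% for twenty. Real datasets have correlated outcomes, so the exact numbers will differ, but the direction of the problem is the same.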

“…scientists are human beings (strange human beings we may be, but human nonetheless) and this introduces bias into the scientific process.”

So, what is being done to address this crisis? Many fields of research now encourage the pre-registration of studies, particularly randomised controlled trials. Pre-registration can involve planning analyses in advance, the intention being to reduce the likelihood of subsequent fishing expeditions. Some fields are ahead of others. For example, in statistical genetics the vast quantities of data required to identify which of a million or so genetic variants are associated with a trait have led to the development of new methods and approaches to avoid the problems of false positive findings and the challenges of replication (see Joni’s post for a bit more on this). Other subjects fare less well. Psychology is amongst those fields often highlighted as being very much in the midst of a replicability crisis. For example, ‘The Reproducibility Project: Psychology’, an attempt at reproducing 100 published psychology studies, found that only 36% of attempted replications led to statistically significant results. Effect sizes were also about half the size of those originally reported. This is a major cause for concern and casts doubt on the way that science is being conducted.

That said, there is something of a silver lining to be gleaned from this. Like the Ioannidis article, the Reproducibility Project: Psychology embodies an attempt at meta-science: the scientific study of scientific findings. Such rigorous attempts at self-critique should be lauded as demonstrating an eagerness on the part of (at least some of) the scientific community to identify and address their own shortcomings. Many other researchers are engaged in meta-scientific endeavours, ranging from further attempts at mass replication and the detailed study of publication biases to attempts to detect the extent and consequences of ‘p-hacking’ within the scientific literature (p-hacking is said to be common, but its effects are likely to be weak). Some researchers have even written a program to check for statistical errors in the already published articles of other scientists (apparently, it hasn’t made them very popular!).

Even meta-scientific studies are getting the meta-science treatment. For example, the Reproducibility Project has faced detailed rebuttals from those who believe its methods were flawed and its conclusions incorrect. So, is meta-science the answer? Certainly meta-science is helping to identify and understand biases where they exist (although, like all science, it is itself subject to the need for replication and self-correction). This increased awareness of issues surrounding replicability is undoubtedly a positive thing. However, dealing with them will of course require more than just awareness. It will need a willingness on the part of scientists to critique and moderate their own behaviour, both at the level of the community and the individual.