Clinical predictions are based on the past experiences of a given population. In this post, David Armstrong, Professor of Medicine and Sociology at King’s College London, tells us how the idea of a population has informed new ways of predicting clinical outcomes. The full article can be accessed here.

A few years ago I completed an online questionnaire based on the first deaths among half a million people recruited to the UK Biobank cohort. I was reassured to find that my life expectancy was that of someone 10 or so years younger; but then I thought how odd it was to estimate the length of my future life by counting dead bodies from the past. This only made sense if it was assumed that I belonged to the same ‘population’ as all those dead people, despite my having no knowledge of who they were or of what we shared (other than similar age ranges).

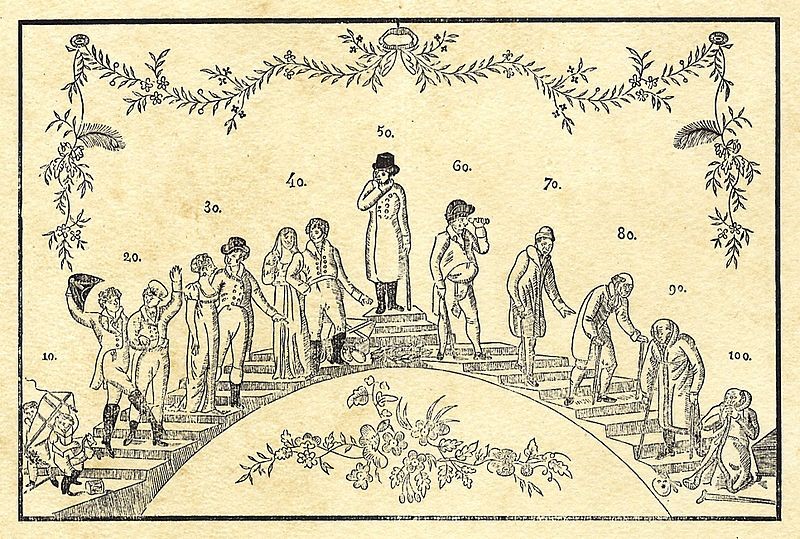

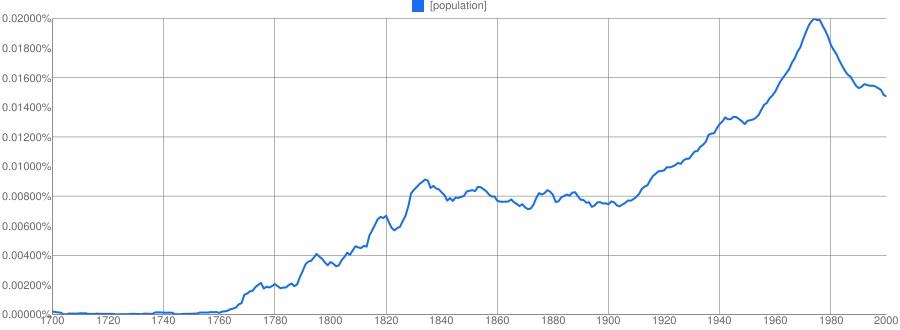

In the past there have been many systems for predicting the future (astrology and horoscopes, crystal balls and Tarot cards, and so on), but today we rely on the idea of a population. That idea is relatively recent: the word itself only came into use towards the end of the 18th century. Before then, a city like London might be described as ‘populous’, but there was no perception that its citizens could be counted. That changed as enumerating the size of the population became routine, especially through the decennial censuses first held in the UK in 1801. Throughout the 19th century these population counts provided a denominator for examining deaths and comparing death rates between different towns and groups of people.

A population in the 19th century was a collection of unique individuals who could be counted. This began to change at the very end of the 19th century with a new perception of individual variability. If individuals varied, then they could belong to many different populations, each defined by the varying characteristic. IQ tests, for example, first developed at the beginning of the 20th century, allowed the distribution of intelligence in a population to be estimated. Indeed, the idea of individual differences transformed both psychology and statistics, bringing a new focus on variability.

When a population had to be counted, everyone needed to be included; but when a population was defined in terms of some specific characteristic, it became possible to take a representative sample and from that sample make inferences about the whole population. In a sense, the sample was not so much a sub-group of a population; rather, the population was an artefact of the sample. This was an important shift. Instead of being just a collection of unique individuals who could be counted, people could now belong to many different populations (characterised by IQ, by cancer, by cholesterol levels, etc.).

After World War II a major cohort study was started in Framingham in the US to examine the determinants and health consequences of hypertension. Its methods partly resembled 19th-century enumeration, but the Framingham study concerned both the geographically located population and, through inference and generalisation to other similar communities, a virtual one. Framingham identified risk factors (a term first used in 1961) for cardiovascular disease and enabled these to be combined into predictive tools. The results were new ‘prognostic models’ and ‘predictive tables’ (terms first used in the 1990s) that could be used to estimate the health experience of populations far removed from that specific New England town.

Clinical medicine in the 19th century had developed its own predictive system, one that rejected the idea of a future determined by external events such as those invoked by astrologers and almanacs. Instead, foresight was based on the known natural history of a pathological lesion (though prognoses were usually expressed in very broad terms such as ‘favourable’ or ‘unfavourable’). Prognosis today, although it too seems to depend on accurate diagnosis, is in fact based on populations (and sub-sub-populations) who have experienced that particular pathology in the past, and these provide a more accurate prediction than the old method of clinical memory. Over the last three decades prediction has therefore once again been exteriorised, but this time our future lies not in our stars but in those populations, real and virtual, that govern our lives and deaths. Yet despite these ‘advances’ in prediction we still have to grapple with the imprecision of inferring from populations to individuals: my predicted life expectancy from UK Biobank tells me about a typical member of ‘my’ population, but not about me personally. I am sure there will be other predictive technologies after the reign of populations has come to an end, but I doubt I’ll live to see them, whatever method is used to predict my life expectancy today.

We recommend the following further reading:

What is a population: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3530737/

UK Biobank risk scores (blog): https://www.fightaging.org/archives/2015/07/mortality-risk-analysis-in-a-dataset-of-half-a-million-people/

The views expressed are those of the author. Posting of the blog does not signify that the Cancer Prevention Group endorse those views or opinions.
